Method

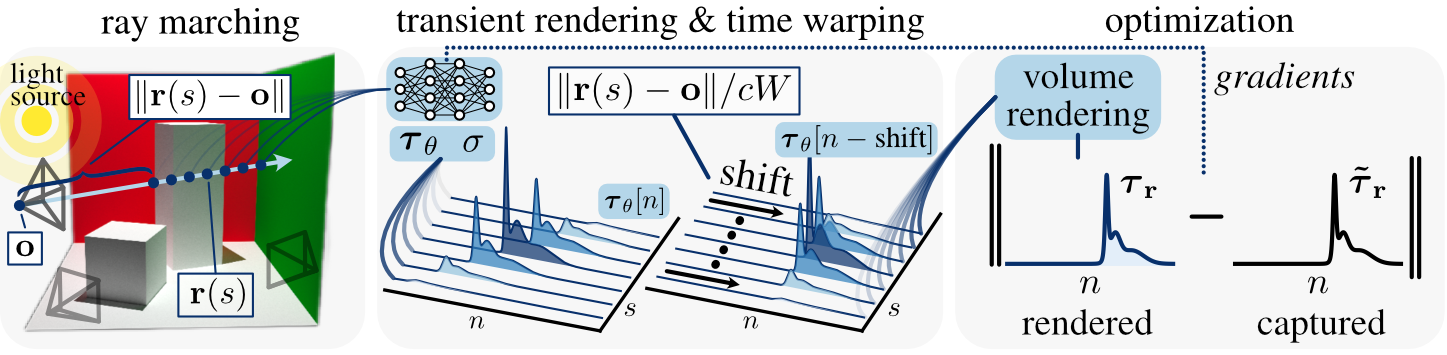

To render transient videos from novel viewpoints, we cast rays into the scene and use a neural representation to learn two fields: a conventional density field and a transient field. The transient field maps a 3D point and a 2D viewing direction to a high-dimensional, discrete-time signal that represents the time-varying radiance at ultrafast timescales. That is, for every ray sample, the neural representation returns a transient whose time bins are indexed by n. To account for the distance between the camera viewpoint and the sample point, we shift each transient in time by the corresponding speed-of-light propagation delay. Without this explicit delay model, the representation would have to memorize the camera–scene propagation delay to every sample, a quantity that depends on the camera position rather than on the scene itself. The representation is supervised by minimizing the difference between rendered and captured transient videos.
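The sketch below illustrates how the per-sample transients along one ray might be delayed and composited into a single rendered transient. It is a minimal NumPy sketch under stated assumptions, not the method's actual implementation. It assumes standard NeRF-style alpha compositing of the density field, a uniform time-bin width `bin_width` in seconds, a one-way camera-to-sample delay rounded to the nearest bin, and zero filling rather than wrap-around when shifting. The functions `delay_bins`, `shift_transient`, and `render_ray` are hypothetical names introduced here for illustration.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def delay_bins(distances, bin_width):
    """Convert camera-to-sample distances (m) into integer time-bin delays.

    Assumption: one-way propagation delay, rounded to the nearest bin.
    """
    return np.round(distances / SPEED_OF_LIGHT / bin_width).astype(int)


def shift_transient(transient, shift):
    """Delay a 1D transient by `shift` >= 0 bins, zero-filling the start.

    Content shifted past the last bin is dropped (no wrap-around).
    """
    out = np.zeros_like(transient)
    n = transient.shape[0]
    if shift < n:
        out[shift:] = transient[: n - shift]
    return out


def render_ray(sigmas, deltas, distances, transients, bin_width):
    """Alpha-composite delayed per-sample transients along one ray.

    sigmas:     (K,) densities at the K ray samples (hypothetical inputs)
    deltas:     (K,) spacing between consecutive samples
    distances:  (K,) camera-to-sample distances in metres
    transients: (K, N) per-sample transients with N time bins
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Accumulated transmittance T_k = prod_{j<k} (1 - alpha_j).
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    shifts = delay_bins(distances, bin_width)
    out = np.zeros(transients.shape[1])
    for w, tr, s in zip(weights, transients, shifts):
        # Each sample's transient is delayed by its own propagation time.
        out += w * shift_transient(tr, int(s))
    return out
```

For example, with 10 ps bins, an opaque sample whose transient is an impulse at bin 0 and that sits three bins' worth of light travel from the camera produces a rendered impulse at bin 3. Because the shift is applied analytically, the learned field only has to describe the radiance leaving each point, not the viewpoint-dependent travel time.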