Flying with Photons: Rendering Novel Views of Propagating Light

This synthesized flythrough shows a pulse of light traveling through a Coke bottle. The whole event spans roughly 3 ns, about 100 million times shorter than the blink of an eye. Light can be seen scattering off the liquid, hitting the ground plane, focusing on the cap, and reflecting toward the back.
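As a quick check on the timescale quoted above, assuming a blink lasts on the order of 0.3 s (a typical figure, not one stated here), the factor of $10^8$ and the distance light covers during the event work out as:

```latex
\begin{aligned}
3\,\text{ns} \times 10^{8} &= 3 \times 10^{-9}\,\text{s} \times 10^{8} = 0.3\,\text{s},\\
c \times 3\,\text{ns} &\approx 2.998 \times 10^{8}\,\text{m/s} \times 3 \times 10^{-9}\,\text{s} \approx 0.9\,\text{m}.
\end{aligned}
```

So the entire flythrough corresponds to less than a metre of light travel.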

Abstract

We present an imaging and neural rendering technique that seeks to synthesize videos of light propagating through a scene from novel, moving camera viewpoints. Our approach relies on a new ultrafast imaging setup to capture a first-of-its-kind, multi-viewpoint video dataset with picosecond-level temporal resolution. Together with this dataset, we introduce an efficient neural volume rendering framework based on the transient field. This field is defined as a mapping from a 3D point and 2D direction to a high-dimensional, discrete-time signal that represents time-varying radiance at ultrafast timescales. Rendering with transient fields naturally accounts for effects due to the finite speed of light, including viewpoint-dependent appearance changes caused by light propagation delays to the camera. We render a range of complex effects, including scattering, specular reflection, refraction, and diffraction. Additionally, we demonstrate removal of viewpoint-dependent propagation delays using a time warping procedure, rendering of relativistic effects, and video synthesis of the direct and global components of light transport.

Video

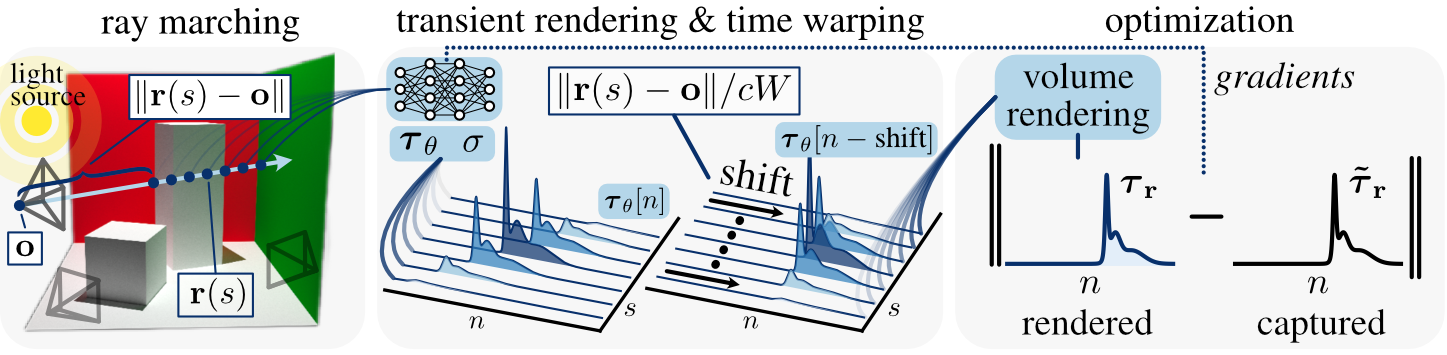

Method

To render transient videos from novel viewpoints, we cast rays into the scene and use a neural representation to learn two fields: a conventional density field and the transient field, which maps a 3D point and 2D direction to a high-dimensional, discrete-time signal representing time-varying radiance at ultrafast timescales. That is, for every sample along a ray, the neural representation returns a transient whose time bins are indexed by n. To account for the distance between the camera viewpoint and the sample point, we shift each transient in time by the corresponding speed-of-light propagation delay. Without this explicit delay model, the representation would have to memorize the propagation delay from the camera to each sample. The representation is supervised by minimizing the difference between rendered and captured transient videos.
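The delay-and-composite step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes NeRF-style alpha compositing, a unit-length ray direction (so the sample distance $t_k$ equals the camera-to-sample distance), and a delay rounded to whole bins of width $\Delta t$. Each sample's transient is shifted by $\Delta_k = \mathrm{round}(t_k / (c\,\Delta t))$ bins and weighted by $T_k \alpha_k$, with $\alpha_k = 1 - e^{-\sigma_k \delta_k}$ and $T_k = \prod_{j<k} (1 - \alpha_j)$. The names `shift_transient` and `render_ray` are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def shift_transient(transient, delay_bins):
    """Delay a discrete-time transient by a whole number of bins.

    Values shifted past the last bin are dropped; vacated early bins are zero.
    """
    n_bins = len(transient)
    shifted = [0.0] * n_bins
    for i, value in enumerate(transient):
        j = i + delay_bins
        if 0 <= j < n_bins:
            shifted[j] = value
    return shifted


def render_ray(t_vals, densities, transients, bin_width_s):
    """Alpha-composite per-sample transients along one camera ray (sketch).

    t_vals:      increasing sample distances from the camera centre (metres),
                 assuming a unit-length ray direction
    densities:   volume density sigma at each sample
    transients:  one discrete-time transient (list of N bins) per sample
    bin_width_s: duration of a single time bin in seconds
    """
    n_bins = len(transients[0])
    pixel = [0.0] * n_bins
    transmittance = 1.0  # T_k: fraction of light transmitted through earlier samples
    for k, t in enumerate(t_vals):
        # Spacing to the next sample; the last interval uses a large sentinel.
        delta = t_vals[k + 1] - t if k + 1 < len(t_vals) else 1e10
        alpha = 1.0 - math.exp(-densities[k] * delta)
        weight = transmittance * alpha
        # Speed-of-light delay from the sample back to the camera, in bins.
        delay_bins = round(t / (C * bin_width_s))
        shifted = shift_transient(transients[k], delay_bins)
        for n in range(n_bins):
            pixel[n] += weight * shifted[n]
        transmittance *= 1.0 - alpha
    return pixel


# One transparent sample at 0.3 m and one opaque sample at 0.6 m, 1 ns bins.
print(render_ray([0.3, 0.6], [0.0, 1e6],
                 [[5.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]], 1e-9))
# prints [0.0, 0.0, 1.0, 0.0]
```

With 1 ns bins (about 0.3 m of light travel per bin), the opaque sample 0.6 m away lands two bins late, while the transparent sample contributes nothing. A practical implementation would more likely use sub-bin interpolation and batched tensors than integer shifts and Python loops.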

Novel View Flythroughs

Scene Image

Transient Flythrough

This captured scene is illuminated from the side by a pulse from a point source. The propagating pulse reveals subsurface scattering in the candles as well as interreflections.

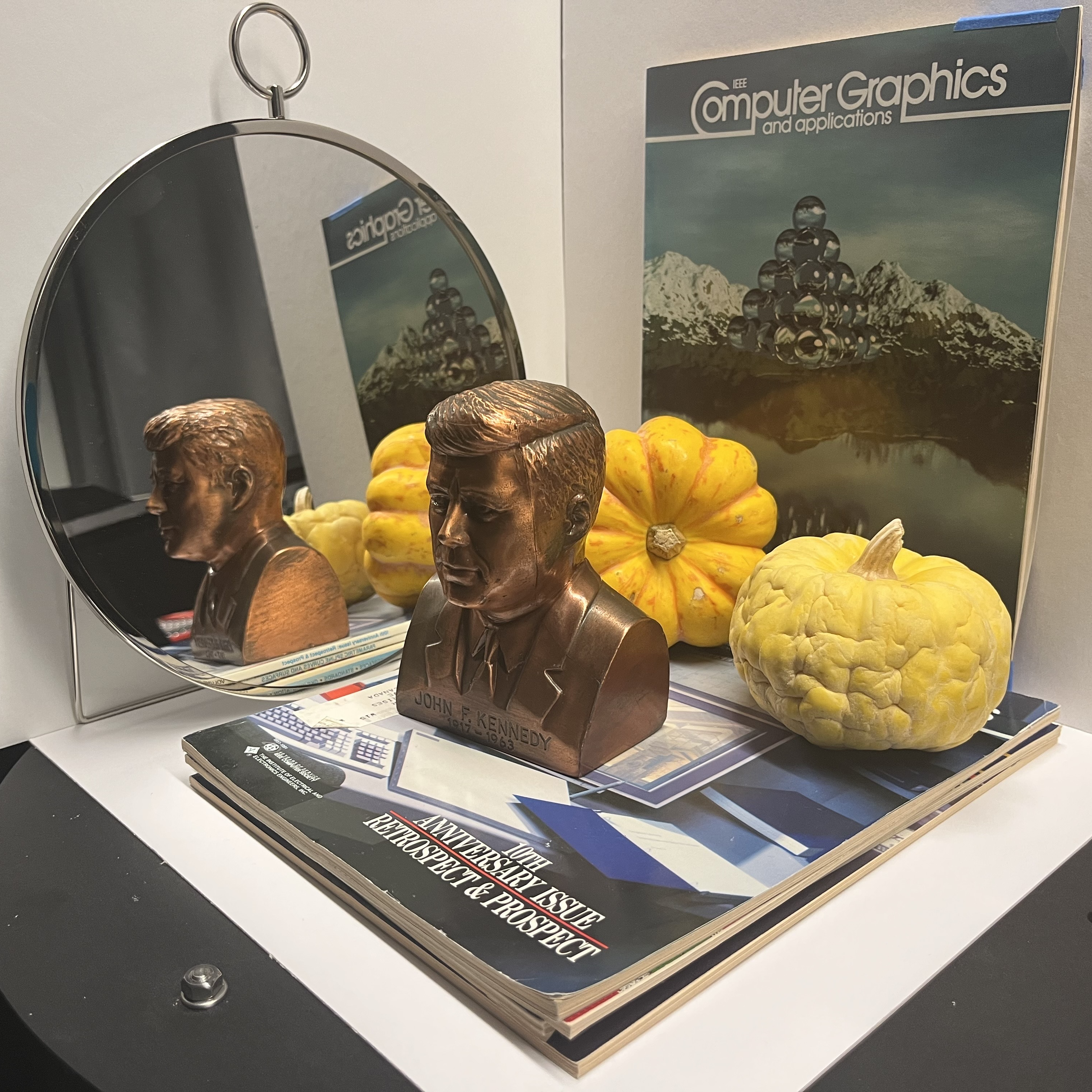

A scene containing a mirror, a statue, and several other objects, illuminated by a point source. The transient reveals the propagation delay of light reflected in the mirror, as well as interreflections.

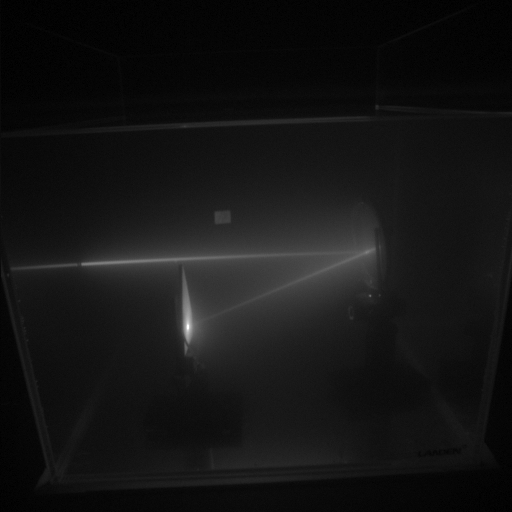

A mirror and a diffuser placed in a tank of cloudy water and illuminated by a laser beam. Both the specular reflection of the laser and the scattering off the diffuse target are visible.

Comparison to baselines

Ground Truth

Transient NeRF

K-Planes

Ours

This simulated scene contains a point light source and a refractive object placed in participating media.

This simulated scene contains a refractive object and two peppers illuminated by a point source of light.

Direct and Global Separation

We estimate the direct and global components of each transient video and then retrain our neural representation separately on each component. In the global component, we see indirect reflections, and the candles appear bright because of their strong subsurface scattering.
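The page does not say how the direct and global components are estimated. Purely as an illustration, one simple way to split a single pixel's transient is time gating: treat the bins around the earliest strong arrival as direct light and everything else as global light. This is a swapped-in heuristic, not the authors' procedure; `split_direct_global`, `threshold_frac`, and `window` are hypothetical names and parameters.

```python
def split_direct_global(transient, threshold_frac=0.5, window=1):
    """Split one pixel's transient into direct and global parts by time gating.

    Hypothetical heuristic: the first bin reaching threshold_frac of the peak
    marks the direct arrival; bins within +/- window of it are labelled direct,
    and all remaining bins are labelled global.
    """
    peak = max(transient)
    if peak <= 0:
        # No signal: attribute everything to the global component.
        return [0.0] * len(transient), list(transient)
    first = next(i for i, v in enumerate(transient) if v >= threshold_frac * peak)
    direct = [v if abs(i - first) <= window else 0.0 for i, v in enumerate(transient)]
    global_part = [0.0 if abs(i - first) <= window else v for i, v in enumerate(transient)]
    return direct, global_part


# A sharp first return around bin 3 followed by a weaker, later (indirect) return.
direct, global_part = split_direct_global([0.0, 0.0, 1.0, 4.0, 2.0, 0.0, 0.0, 1.0, 1.0, 0.0])
print(direct)       # [0.0, 0.0, 1.0, 4.0, 2.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(global_part)  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0]
```

The two parts always sum back to the original transient. A gate this crude would leak some global light into the direct component wherever subsurface scattering overlaps the first peak, as it would in the candles.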

Direct

Global

Unwarping

To create a more intuitive visualization, we shift the rendered transients to remove the propagation delay from the scene to the camera, which removes the viewpoint-dependent appearance of the transients. Without unwarping, the wavefronts exiting the Coke bottle along the ground plane appear to travel away from the camera. The simulated Cornell scene has only one light source, at the top, and the unwarped version depicts this more intuitively.
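Per pixel, unwarping amounts to advancing the transient by the scene-to-camera travel time. The sketch below assumes a known camera-to-surface distance for each pixel (for example, an expected depth derived from the density field; this is an assumption, since the page does not say how the distance is obtained) and a whole-bin shift. `unwarp_transient` is a hypothetical name.

```python
C = 299_792_458.0  # speed of light in m/s


def unwarp_transient(transient, depth_m, bin_width_s):
    """Advance a pixel's transient by the camera-to-surface propagation delay.

    depth_m is the assumed distance from the camera to the visible surface;
    the delay is rounded to a whole number of time bins.
    """
    delay_bins = round(depth_m / (C * bin_width_s))
    n_bins = len(transient)
    # Output bin n takes the value that was recorded delay_bins later.
    return [transient[n + delay_bins] if 0 <= n + delay_bins < n_bins else 0.0
            for n in range(n_bins)]


# A return recorded in bin 3 from a surface 0.6 m away (1 ns bins) moves to bin 1.
print(unwarp_transient([0.0, 0.0, 0.0, 1.0, 0.0], 0.6, 1e-9))
# prints [0.0, 1.0, 0.0, 0.0, 0.0]
```

Because the shift uses each pixel's own depth, it removes the viewpoint-dependent delay while leaving the timing of light travel within the scene (source to surface) intact.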

Regular

Unwarped

Citation

@article{malik2024flying,
  author = {Malik, Anagh and Juravsky, Noah and Po, Ryan and Wetzstein, Gordon and Kutulakos, Kiriakos N. and Lindell, David B.},
  title = {Flying with Photons: Rendering Novel Views of Propagating Light},
  journal = {ECCV},
  year = {2024}
}